Train like we fight, fight like we train. The Air Force is currently exploring the use of Virtual Reality (VR); however, VR does not involve a real aircraft. One solution may be to embed portions of a synthetically generated environment into a pilot’s view of the real world. Embedding portions of the virtual world onto a pilot’s visor whilst airborne could produce higher-quality training. The technology to do this is Augmented Reality (AR). This new vector into the airborne environment could enable a paradigm shift in aviation training.

As an Air Force recognised Elite Sportsperson (Pilot) for the sport of Reno Air Racing in the jet class, I have often wondered how we can practise safely in the absence of another training partner. Then it occurred to me: “Why do we even need another physical aircraft at all? What if there was some way of superimposing another aircraft onto our helmet visors so we have a synthetically generated training partner?” I then thought about how the Australian Defence Force (ADF) could benefit, which led to this blog.

Across all platforms, the ADF’s predominant approach to pilot training is to fly the real aircraft and conduct every exercise in it. This is expensive in money, manpower, time, effort and resources: delivering such training requires an enormous budget and many assets and people to achieve the required outcome.

The Air Force is currently exploring VR alone, training without a real aircraft, and this can be sufficient for learning some skills. For certain skills, however, it may be better to place the virtual world inside a real aircraft’s cockpit to produce higher-quality training, and AR is what makes this possible.

What’s the difference between VR and AR?

VR is best described as an artificial digital environment that completely replaces the real one, immersing a person in a digitally created world. Audio-visual input, and in some cases other sensory stimuli, are created entirely by a digital device and, in most cases, delivered to the person via a headset.

AR, on the other hand, is increasingly popular, yet many people cannot distinguish it from VR. The main difference is that AR is ‘layered’ on top of the real world and can take many forms. The most common are videos, images and other interactive data embedded in one’s perception of the real world, enhancing the user’s real-world experience. AR can also be delivered through smart glasses, headsets and portable devices. So why not through a pilot’s visor?

Is this the solution?

Where VR replaces your vision, AR adds to it. AR devices, such as the Microsoft HoloLens and various enterprise-level “smart glasses,” are transparent, letting you see everything in front of you as if you were wearing a lightly tinted pair of sunglasses. The technology is designed for free head movement while overlaying images on whatever the user is viewing.

Current AR Applications

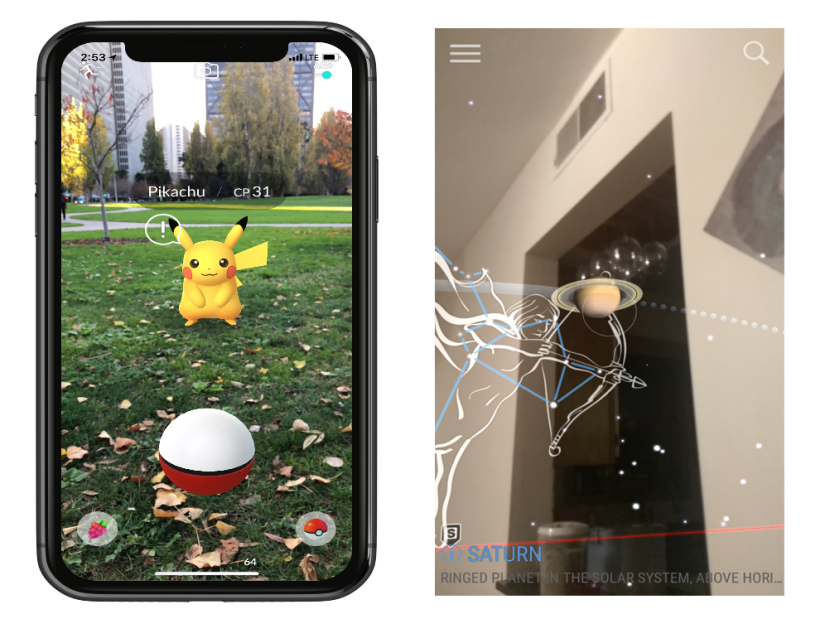

The concept extends to smartphones with AR apps and games, such as Pokémon Go (Figure 1, left), which use a phone’s camera to track the surroundings and overlay additional information on top of what is displayed on the screen. The SkyView app is another example (Figure 1, right).

AR displays can offer anything from a simple data overlay showing the time to holograms floating in the middle of a room. Pokémon Go and SkyView overlay synthetic images on the phone screen, on top of whatever the camera is viewing, whereas the HoloLens and other smart glasses let you virtually place floating app windows and 3D images around you.

Figure 1. (Left) Pokémon Go; (Right) SkyView app.

Current pilot training systems

Current technologies allow a pilot to be trained either in a real aircraft in the sky or in a simulator on the ground, which provides a VR experience (see Figure 2).

A ground simulator gives pilots a medium to practise procedures and manoeuvres, such as Air-to-Air and Air-to-Ground combat, refuelling, formation flying and emergencies, at lower cost than flying a real aircraft. However, the pilot misses out on the experience and sensory stimulation of actually being in the sky: G-forces, the ‘seat-of-your-pants’ feelings and every other facet of flying a real aircraft, including navigation, meteorological effects, physiological effects, flight dynamics, formation, real-world communication, operating aircraft systems and switchology, and unplanned flight deviations, all whilst airborne. Being in the air elicits physiological and psychological reactions and builds muscle memory.

Physically flying a real aircraft is different from flying a simulator. Simulators are great for procedural training, whereas flying a real aircraft provides the actual physical airborne environment, where mistakes are best kept to a minimum.

Figure 2. (Top) Pilot in a real aircraft up in the sky. (Bottom) Pilot on the ground in a simulator.

The F-35 helmet is a great technological leap forward, providing the pilot with unprecedented Situational Awareness (SA); AR can build on this technology (see Figure 3).

Figure 3. F-35 Helmet with enhanced SA information.

Could this be the solution?

My idea is not to have a student sit in a simulator wearing VR goggles, immersed in a VR world, but to place that student in a real aircraft with a virtual world superimposed on what they see through their visor.

This would be achieved by synthetically injecting virtual entities into the real world, up in the sky, and having them manoeuvre in relation to both virtual and real aircraft (see Figure 4).
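To make a virtual entity appear to manoeuvre relative to a real aircraft, the on-board system has to know where that entity sits in the pilot’s line of sight. The Python sketch below is a minimal illustration, assuming ownship and virtual entities share a local North-East-Down (NED) frame in metres; the `NED` class and `line_of_sight` function are hypothetical names, not Red6’s or any fielded system’s implementation. A real system would also need aircraft attitude and head tracking to map these angles onto the visor.

```python
import math
from dataclasses import dataclass


@dataclass
class NED:
    """Position in a local North-East-Down frame, in metres (assumed shared frame)."""
    north: float
    east: float
    down: float


def line_of_sight(ownship: NED, entity: NED) -> tuple:
    """Return (range_m, bearing_deg, elevation_deg) from ownship to a virtual entity.

    Bearing is measured clockwise from north, in the range [0, 360).
    Elevation is positive when the entity is above ownship (the down axis points at the ground).
    """
    dn = entity.north - ownship.north
    de = entity.east - ownship.east
    dd = entity.down - ownship.down
    horizontal = math.hypot(dn, de)
    rng = math.hypot(horizontal, dd)
    bearing = math.degrees(math.atan2(de, dn)) % 360.0
    elevation = math.degrees(math.atan2(-dd, horizontal))
    return rng, bearing, elevation


# Hypothetical example: a virtual RedAir entity about 3 km to the north-east, 300 m above ownship.
own = NED(0.0, 0.0, -3000.0)          # ownship at 3000 m altitude
bandit = NED(2121.3, 2121.3, -3300.0)  # virtual entity at 3300 m altitude
rng, brg, elev = line_of_sight(own, bandit)
print(f"range {rng:.0f} m, bearing {brg:.1f} deg, elevation {elev:.1f} deg")
```

Recomputing these angles every frame, as the virtual entity’s scripted position changes, is what would let it appear to fly alongside or against the real aircraft.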

The student physically flies the aircraft and performs all the required tasks, while a computer program on board, once initiated, superimposes fictitious ground targets, RedAir threats, friendlies or even a tanker onto the student’s visor.

Figure 4. Images obtained from the Red6AR website (Red6, 2021).

Whereas VR creates an entirely new synthetic world around you, AR adds images to your real surroundings that aren’t there in real life: for instance, showing an aircraft against the actual sky instead of creating both the aircraft and the sky in a VR environment. The idea is to make pilots in real aircraft in the sky feel as if they are actually flying with, or against, simulated aircraft.

Beyond Visual Range (BVR), a synthetic target can be generated within the aircraft’s RADAR system and shown on the RADAR display; the student then has to decide on the best course of action to locate, identify, track or even prosecute that target. Once the target comes Within Visual Range (WVR), the on-board computer system will superimpose the target onto the student’s helmet visor, scaled according to the target’s range, and the student will be required to manoeuvre accordingly: to prosecute RedAir or ground threats, to formate or work as a package with friendlies, or even to carry out an Air-to-Air tanking exercise (see Figure 5).
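The range-dependent scaling follows from basic geometry: an object of span s at range R subtends an angle θ = 2·arctan(s / 2R). The Python sketch below uses that relation to decide whether a synthetic target is presented on the RADAR display (BVR) or drawn on the visor (WVR), and at what angular size. The 10 km visual-range threshold, the 11 m wingspan and the function names are hypothetical placeholders for illustration only; real WVR limits depend on target size, aspect, lighting and weather.

```python
import math

# Hypothetical BVR/WVR threshold for this sketch only.
VISUAL_RANGE_M = 10_000.0


def angular_size_deg(span_m: float, range_m: float) -> float:
    """Angle in degrees subtended by an object of the given span at the given range: 2*atan(s / 2R)."""
    return math.degrees(2.0 * math.atan(span_m / (2.0 * range_m)))


def route_synthetic_target(span_m: float, range_m: float) -> dict:
    """Decide where a synthetic target is presented and, if on the visor, how large to draw it."""
    if range_m > VISUAL_RANGE_M:
        # BVR: the target exists only as a synthetic RADAR contact.
        return {"display": "radar", "range_m": range_m}
    # WVR: draw on the visor so it subtends the angle a real aircraft of that span would.
    return {
        "display": "visor",
        "range_m": range_m,
        "angular_size_deg": angular_size_deg(span_m, range_m),
    }


# Hypothetical fighter-sized target (11 m wingspan) closing from 40 km.
for rng in (40_000.0, 8_000.0, 2_000.0, 500.0):
    print(route_synthetic_target(11.0, rng))
```

At these ranges θ is approximately s / R, so the drawn target grows roughly in inverse proportion to range as it closes, which is the scaling effect described in the paragraph above.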

Figure 5. AR Air-to-Air tanking exercise (Red6, 2021).

There’s no substitute for actually flying a real aircraft in the sky. Unlike in a simulator, a pilot strapped into a real aircraft feels genuine pressures, such as G-forces and the possibility of running out of fuel or crashing into the ground, so the cognitive load on the pilot’s brain is massively increased.

Possibility to reality

With AR, pilots still fly the aircraft, using navigation systems, working with air traffic control, experiencing the weather and reading rough terrain, all in a real-world airspace environment, while acting on time-critical mission data and objectives. AR applications could help pilots avoid mistakes and train them to make the right decisions at the right time in a time-compressed environment.

Fortunately, a US company (Red6, 2021) is already exploring this idea, so a Commercial Off-The-Shelf (COTS) purchase may be the solution.

If this is achievable, the resulting efficiency would be felt in every cost domain: a reduced training burden on squadron personnel and aircraft, and fewer resources, less logistics and less fatigue on both personnel and aircraft to achieve a desired outcome. This addition to the training environment would add value to students’ learning, thereby increasing the value of mission training objectives and reducing costs to the ADF.
